
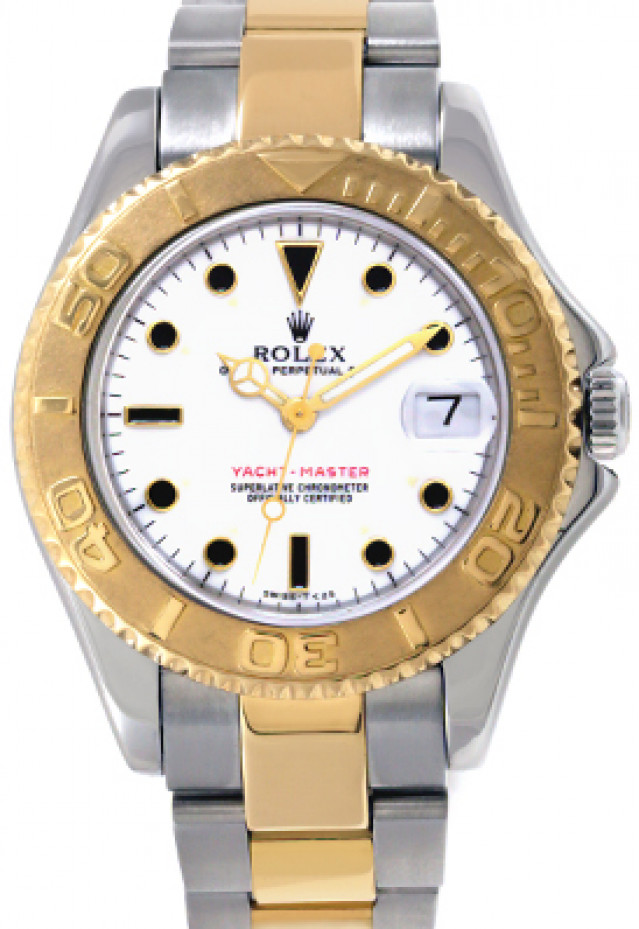

Rolex Certified Pre-Owned Yacht-Master, 1997, 35 mm, steel/yellow gold. Reference: 68623.

Free Delivery within 2-3 days

Free returns for 14 days

Click & Collect

The two-year international Rolex guarantee

Delivered at the time of sale, the Rolex Certified Pre-Owned guarantee card officially confirms that the watch is genuine on the date of purchase and guarantees its proper functioning for a period of two years from this date, in accordance with the guarantee manual. Find out more


Oyster Perpetual

Yacht-Master

Staying on course, mapping invisible routes.

For those at sea, staying on course is a constant challenge. When dealing with the elements, nothing is certain and constant reaction is required to stay in the right direction. Since its launch in 1992, the Oyster Perpetual Yacht-Master has been equipped with a bidirectional rotatable bezel that facilitates the calculation and reading of navigational time. Elegantly combining functionality and nautical style, this watch has made its mark well beyond its professional realm.

A shared quest for precision

Knowing where you are in space and time, setting a course and sticking to it are vital in navigation. Given its function, the watch is an essential tool for sailors to assess their position. Regarded as the most precise horological instruments in the world, marine chronometers have been certified by astronomical observatories since the 18th century. At the time, the ultimate authority for measuring chronometric precision was the Kew Observatory in Great Britain.

In 1914, the founder of Rolex, Hans Wilsdorf, had one of the brand’s watches tested by this very observatory, which certified it as a chronometer: a first in the watchmaking world for a wristwatch. Since then, renowned sailors, such as Sir Francis Chichester and Bernard Moitessier, have navigated the seas with Rolex wristwatches serving as onboard chronometers.

Matching the precision of marine chronometers was fundamental to Rolex’s watchmaking.

Designed for navigators

Sailing occupies a special place in the world of Rolex. In 1958, the brand partnered the New York Yacht Club, creator of the legendary America’s Cup. Rolex then formed partnerships with several prestigious yacht clubs around the world and became associated with major nautical events – offshore races and coastal regattas.

These strong ties culminated in 1992 with the launch of the Yacht-Master. Boasting the robustness and waterproofness of our Oyster case, this chronometer is fitted with a bidirectional bezel with raised 60-minute graduations to enable navigational time to be calculated and read.

This Yacht-Master 42, which is an outstanding nautical watch, is also proof of Rolex’s firm commitment to yachting.

Precious on land and at sea

Available in three diameters – 37, 40 and 42 mm – and in various precious versions – 18 ct yellow, white and Everose gold – as well as in Everose Rolesor and Rolesium versions, the Yacht-Master is unique in the world of Rolex professional watches. An elegant watch with a sporty character, it was the first to be paired with an Oysterflex bracelet in 2015.

In 2023, after testing under real-life conditions by acclaimed helmsman Sir Ben Ainslie, Rolex launched a new version of the Yacht-Master 42. It is made of RLX titanium, a high-performance material, at once light, robust and corrosion resistant.

A veritable ally at sea, the Yacht-Master also elegantly adorns the wrists of navigators once back on solid ground. With many different versions, it is a model that transcends its seafaring origins. It has become a watch for those who know how to change course without losing sight of the horizon, moving freely.

Yacht-Master 42

Oyster, 42 mm, yellow gold.


$9,450 SALE

Rolex Watch Yacht-Master / Ref. 68623 35mm, Stainless Steel W525332

Do you own this or a similar item and would like to sell to us?

Certified Authentic

Return Policy

Service Warranty

Like New For Life


You may also like

Thunderbird

Rolex Oyster link rivet band in 18k yellow gold

Oyster Perpetual

Explorer II

Vintage Rolex Oyster band, spring loaded rivet style, in 18k rose gold. Will fit a 19mm lug width watch. 5.75 inch length

Pre-Owned Rolex Mens Watch Details

Rolex Yacht-Master in 18k & stainless steel. Auto w/ sweep seconds and date. 35 mm case size. With papers. **Bank wire only at this price** Ref 68623. Circa 1997. Fine Pre-owned Rolex Watch. Certified preowned Sport Rolex Yacht-Master 68623 watch is made out of Stainless steel on a 18k & Stainless Steel bracelet with a 18k & Stainless Steel Fliplock buckle. This Rolex watch has a 35 x 35 mm case with a Round caseback and Gold Luminescent dial. It is Gray and Sons Certified Authentic and comes backed by our 24-month warranty. Each watch is inspected by our certified in-house Swiss-trained watchmakers before shipment including final servicing, cleaning, and polishing. If you have inquiries about this Rolex Yacht-Master watch please call us toll free at 800-705-1112 and be sure to reference W525332 . Watch is in Excellent condition.


Rolex 68623 Yellow Gold & Steel on Oyster White

| | |
|---|---|
| Brand: | Rolex |
| Gender: | Ladies |
| Model Name: | Yacht-Master |
| Model Number: | 68623 |
| Case Size: | 29 mm |
| Case: | Stainless Steel |
| Bezel: | 18kt Yellow Gold, Extruded, 60 Minutes Elapsed Time Unidirectional Rotatable |
| Dial: | White, Black Dots on Gold Markers |
| Bracelet: | 18kt Yellow Gold & Stainless Steel |
| Bracelet Type: | Oyster |


Discover all known Rolex Yacht-Master 68623 unique configurations

UNIQUE VARIATIONS OF PREVIOUSLY SOLD Rolex Yacht-Master 68623

- Women's Rolex

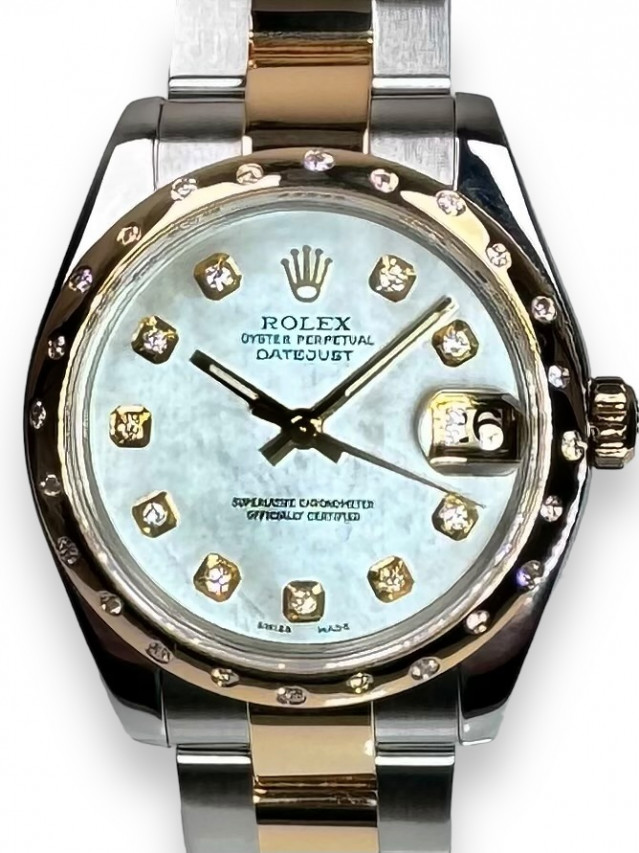

- Rolex Ladies Datejust

- Rolex Ladies Oyster Perpetual

- Rolex Ladies Cellini

Rolex for Women

Similar in Gold & Steel

Rolex Yacht-Master 68623 or similar watches

$8,950 $9,590

You Save: $640


Article Contents

Introduction, defining cognitive interviewing, fitting cognitive interviewing into larger survey tool development, steps in undertaking cognitive interviewing, data availability statement, acknowledgements, ethical approval, conflict of interest statement.


The devil is in the detail: reflections on the value and application of cognitive interviewing to strengthen quantitative surveys in global health


K Scott, O Ummer, A E LeFevre, The devil is in the detail: reflections on the value and application of cognitive interviewing to strengthen quantitative surveys in global health, Health Policy and Planning, Volume 36, Issue 6, July 2021, Pages 982–995, https://doi.org/10.1093/heapol/czab048


Cognitive interviewing is a qualitative research method for improving the validity of quantitative surveys, which has been underused by academic researchers and monitoring and evaluation teams in global health. Draft survey questions are administered to participants drawn from the same population as the respondent group for the survey itself. The interviewer facilitates a detailed discussion with the participant to assess how the participant interpreted each question and how they formulated their response. Draft survey questions are revised and undergo additional rounds of cognitive interviewing until they achieve high comprehension and cognitive match between the research team’s intent and the target population’s interpretation. This methodology is particularly important in global health when surveys involve translation or are developed by researchers who differ from the population being surveyed in terms of socio-demographic characteristics, worldview, or other aspects of identity. Without cognitive interviewing, surveys risk measurement error by including questions that respondents find incomprehensible, that respondents are unable to accurately answer, or that respondents interpret in unintended ways. This methodological musing seeks to encourage a wider uptake of cognitive interviewing in global public health research, provide practical guidance on its application, and prompt discussion on its value and practice. To this end, we define cognitive interviewing, discuss how cognitive interviewing compares to other forms of survey tool development and validation, and present practical steps for its application. These steps cover defining the scope of cognitive interviews, selecting and training researchers to conduct cognitive interviews, sampling participants, collecting data, debriefing, analysing the emerging findings, and ultimately generating revised, validated survey questions. 
We close by presenting recommendations to ensure quality in cognitive interviewing.

Cognitive interviewing seeks to bridge linguistic, social, and cultural gaps between the researchers who develop surveys and the populations who complete them to improve the match between research intent and respondent interpretation.

This methodology is gaining prominence in global public health but could benefit from wider discussion and application in our field; to this end, we present a description of cognitive interviewing, its value and distinguishing features, and practical guidance for its application.

The methodological musing calls attention to the practical details of its application, including researcher training, allocating adequate time for cognitive interviews, structured debriefs, and systematically revising and testing multiple iterations of the survey tool.

This methodological musing calls attention to cognitive interviewing, a qualitative research methodology for improving the validity of quantitative surveys that has often been overlooked in global public health. Cognitive interviewing is ‘the administration of draft survey questions while collecting additional verbal information about the survey responses, which is used to evaluate the quality of the response or to help determine whether the question is generating the information that its author intends’ (Beatty and Willis, 2007). This methodology helps researchers see survey questions from the participants’ perspectives rather than their own by exploring how people process information, interpret the words used and access the memories or knowledge required to formulate responses (Drennan, 2003).

Cognitive interviewing methodology emerged in the 1980s out of cognitive psychology and survey research design, gaining prominence in the early 2000s (Beatty and Willis, 2007). Cognitive interviewing is widely employed by government agencies in the preparation of public health surveys in many high-income countries [e.g. the Collaborating Center for Questionnaire Design and Evaluation Research in the Centers for Disease Control and Prevention (CDC)/National Center for Health Statistics (2014) and Agency for Healthcare Research and Quality in the Department of Health and Human Services (2019) in the USA and the Quality Care Commission (2019) for the National Health Service Patient Surveys in the UK]. Applications in the global public health space are emerging, including to validate measurement tools undergoing primary development in English and for use in English [e.g. to measure family response to childhood chronic illness (Knafl et al., 2007)]; to support translation of scales between languages [e.g. to validate the London Measure of Unplanned Pregnancy for use in the Chichewa language in Malawi (Hall et al., 2013)] and to assess consumers’ understanding and interpretation of and preferences for displaying information [e.g. for healthcare report cards in rural Tajikistan (Bauhoff et al., 2017)]. However, this methodology remains on the periphery of survey tool development by university-based academic researchers and monitoring and evaluation teams working in global health; most surveys are developed, translated and adapted without cognitive interviews, and publications of survey findings rarely state that cognitive interviews took place as part of tool development processes.

Context: respectful maternity care in rural central India

We used cognitive interviewing to examine survey questions for rural central India, adapted from validated instruments to measure respectful maternity care used in Ethiopia, Kenya and elsewhere in India. This process illuminated extensive cognitive mismatch between the intent of the original questions and how women interpreted them, which would have compromised the validity of the survey’s findings (Scott et al., 2019). Two examples are provided here.

Cognitive interviews revealed that hypothetical questions were interpreted in unexpected ways

A question asked women whether they would return to the same facility for a hypothetical future delivery. The researchers intended the question to assess satisfaction with services. Some women replied no, and, upon probing, explained that their treatment at the facility was fine but that they had no intention of having another child. Other women said yes, despite experiencing some problematic treatment, and probing revealed that they said this because they were too poor to afford to go anywhere else.

Cognitive interviews revealed that Likert scales were inappropriate

The concept of graduated agreement or disagreement with a statement was unfamiliar and illogical to respondents. Women did not understand how to engage with the Likert scales we tested (5-, 6- and 10-point scales, using numbers, words, colours, stars, and smiley faces). Most respondents avoided engaging with the Likert scales, instead responding in terms of a dichotomous yes/no, agree/disagree, happened/did not happen, etc., despite interviewers’ attempts to invite respondents to convert their reply to a Likert response. For example, when asked to respond on a 6-point Likert scale to the statement ‘medical procedures were explained to me before they were conducted’, a respondent only repeated ‘they didn’t explain’. Other respondents, when shown a smiley face Likert scale, focused on identifying a face that matched how they felt rather than one that depicted their response to the statement in question. For example, when asked to respond to the statement ‘the doctors and nurses did everything they could to help me manage my pain’, a respondent pointed to a sad face, explaining that although the doctors and nurses helped her, since she was in pain her face was ‘like this’ (i.e. sad). Without cognitive interviews, survey enumerators would unknowingly record responses unrelated to the question at hand or would attempt to fit respondents’ dichotomous answers into Likert scales using whatever interpretation the enumerator saw fit.

Cognitive interviewing recognizes that problems with even one detail of a survey question can compromise the validity of the data gathered, whether it is an improper word, confusing phrasing, unfamiliar concept, inappropriate response option, or other issue. Without cognitive interviews, gaps between question intent and respondent interpretation can persist, severely compromising the quality of data generated from surveys (Box 1). Furthermore, cognitive mismatch is often impossible to detect after data collection. Instead, responses recorded in the survey are taken as ‘true’, regardless of whether the respondents understood and answered the question in the intended manner and regardless of the assistance, adjustment, or interpretation provided by enumerators.

In this article, we argue that cognitive interviewing should be an essential step in the development of quantitative survey tools used in global public health and call attention to the detailed steps of applying this method in the field. We start by reviewing what cognitive interviewing is and consider the varied definitions and use cases in survey tool development. We next outline the recommended steps in survey tool development and then provide an overview of how to go about cognitive interviewing. We close by reflecting on the broader implications of cognitive interviewing.

Cognitive interviewing enables researchers to assess how participants process information to comprehend and respond to survey questions. Cognitive interviews reveal and help correct issues including word choice, syntax, sequencing, sensitivity, response options, and resonance with local world views and realities (Table 1). These factors individually and collectively play a vital role in determining what cognitive domains a respondent accesses and whether these domains align with the construct that researchers are seeking to measure. Examples for this paper have been drawn from cognitive interview data collected in rural India to develop survey tools that assess women’s experiences during pregnancy and childbirth (Scott et al., 2019) and measure a variety of reproductive, maternal and child health outcomes, including infant and young child feeding (IYCF) and family planning (FP) (Lefevre et al., 2019).

Table 1. Components of survey tools assessed by cognitive interviewing

| Survey tool component assessed | Explanation | Example |
|---|---|---|
| Word choice | Words used in the survey questions may not be understood by respondents, may have unintended alternative meanings, may be overly vague or specific or may be less natural than alternative words | When translating surveys from English to Hindi, we found that professional translators and Hindi-speaking researchers with experience in rural areas often selected formal Hindi words that were unfamiliar to rural women |
| Syntax | Sentences in survey questions may be too complex or too long, reducing respondent capacity to retain key features of the question | The question ‘During your time in the health facility did the doctors, nurses, or other health care providers introduce themselves to you when they first came to see you?’ contained too many words and clauses. By the time the researcher finished reading it, the respondent lost track of the core question |
| Sequencing | The order of questions may be inappropriate. Placing sensitive or emotionally charged questions too early in the survey can be uncomfortable for respondents and damage respondent–enumerator rapport, reducing the likelihood of a respondent providing a truthful and complete response | A survey on respectful maternity care initially asked post-partum women if they were verbally or physically abused during childbirth within the first few survey questions, to ensure that this crucial question was answered before any respondent fatigue set in. However, cognitive interviews revealed that women were uncomfortable with the question and unlikely to disclose abuse without first establishing rapport through a range of less emotionally intense questions |
| Sensitivity | Questions or response options may be too direct or include topics that are insufficiently contextualized, leading to respondent and enumerator discomfort and eroding rapport | When asking women about their birth companions, they found it strange and uncomfortable to be probed about whether male family members were with them |
| Response options | Response options may be insufficient to capture the actual range of responses or may be incomprehensible or uncomfortable for respondents | Likert scales with more than three response options were incomprehensible to most rural Indian women we interviewed. Asking women to estimate the amount of food they gave their child in the 24-hour dietary recall in terms of cups or bowls was considered illogical since roti (flatbread), a common food, does not fit into cups |
| Resonance with local worldviews and realities | Questions may ask about domains of importance to the research team but that do not resonate with respondent views or realities | ‘Being involved in decisions about your health care’ is a domain of global importance in respectful maternity care. However, in rural India, the concept of healthcare workers involving the patient in healthcare decisions was unfamiliar and, when explained, considered undesirable |
| Cognitive mismatch | Questions may access respondent cognitive domains that do not map on to the domains intended by the researchers | Women were asked whether they would recommend the place where they gave birth to a friend, as a proxy for quality of care. However, women frequently responded ‘no’ because they did not have friends, did not want to tell other women what to do or did not think they should make recommendations for other people, which was unrelated to their maternity care experiences |
| Memory | Questions or response options may seek to access respondent memories in ways that are too cognitively demanding | Recalling specific post-partum practices from many months ago may not be possible for some respondents |


While it is usually possible to identify and remedy linguistic and syntax issues in survey questions, cognitive interviewing cannot always solve deeper problems with survey research. Cognitive interviews may illuminate question failures arising from a mismatch between the underlying concepts that the survey attempts to measure and the concepts that resonate with the respondent’s worldview and reality (Schuler et al., 2011; Scott et al., 2019). In these cases, question revision will not achieve cognitive alignment between researcher and participant. Instead, researchers must drop questions from the survey and potentially generate new items.
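The distinction between problems that revision can fix and problems that call for dropping a question can be made concrete with a small tracking log. The sketch below is illustrative only and is not part of the authors' method: the `QuestionLog` class, the `triage` function, the split of the Table 1 components into `FIXABLE` and `CONCEPTUAL` groups, and the 20% threshold are all assumptions chosen for the example.

```python
from dataclasses import dataclass, field

# Problem categories borrowed from Table 1. Grouping them into "fixable by
# rewording" versus "conceptual" is an assumption made for this sketch.
FIXABLE = {"word choice", "syntax", "sequencing", "sensitivity",
           "response options", "memory"}
CONCEPTUAL = {"resonance", "cognitive mismatch"}


@dataclass
class QuestionLog:
    """Problems observed for one draft question, one set per interview."""
    text: str
    interviews: list = field(default_factory=list)

    def record(self, problems=()):
        # Store the problem categories noted in a single cognitive interview.
        self.interviews.append(set(problems))


def triage(log, threshold=0.2):
    """Suggest a next step for a question (hypothetical decision rule)."""
    n = len(log.interviews)
    if n == 0:
        return "untested"
    # Share of interviews in which each kind of problem appeared at least once.
    conceptual = sum(1 for p in log.interviews if p & CONCEPTUAL) / n
    fixable = sum(1 for p in log.interviews if p & FIXABLE) / n
    if conceptual > threshold:
        return "drop or replace"
    if fixable > threshold:
        return "revise and retest"
    return "retain"


if __name__ == "__main__":
    recommend = QuestionLog("Would you recommend this facility to a friend?")
    for problems in (["cognitive mismatch"], ["cognitive mismatch"], [],
                     ["cognitive mismatch"], []):
        recommend.record(problems)
    print(recommend.text, "->", triage(recommend))
```

In practice the decision to revise or drop a question rests on the research team's judgement during debriefs; a log like this only keeps the evidence for that judgement organized across rounds.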

There are many terms and approaches used for strengthening surveys, some of which may encompass cognitive interviewing or include components of it without applying the label (Table 2). We argue, however, that cognitive interviewing should be a standalone approach integrated into a larger process of survey tool development.

Table 2. Approaches to strengthening surveys

| Approach | Description | Comparison to cognitive interviewing | Issue |
|---|---|---|---|
| Expert review | Subject area experts review the survey tool and judge how well each questionnaire item truly reflects the construct it is intended to measure | Experts are unable to predict how the survey respondents will interpret the questions | |
| Respondent-driven pretesting | A small group of participants with the same characteristics as the target survey population complete the survey. Researchers elicit feedback during the survey or at the end through debriefings. Feedback elicitation can include targeted probes about questions that appeared problematic, in-depth exploration of each question, probing on a random sub-set of questions, or asking participants to rate how clear the question was | | Low methodological clarity: can be the same as cognitive interviewing or quite different |
| Translation and back translation | After translating a survey from the origin to the target language, a different translator ‘blindly’ translates the survey back. Differences are then compared and resolved | Involves bilingual translators whose world view and experience do not match the target population’s, making them unable to comment on the tool’s appropriateness | |
| Pilot testing | Enumerators administer the survey to a small group of participants with the same characteristics as the target survey population in as close to real world conditions as possible | Focuses on the mechanics of implementation while cognitive testing focuses on the survey questions achieving shared understanding between researcher intent and respondent interpretation | |


Survey tool development starts with item generation, which may include a variety of approaches, including in-depth interviews with respondents, review of literature and existing survey tools, and expert review. This is followed by translation, cognitive interviewing, content modification, and then pilot testing ( Figure 1 ).

Situating cognitive interviewing within the larger process of tool development

What is Kilkari? Kilkari is India’s flagship direct-to-beneficiary messaging programme. Pregnant and post-partum women receive one weekly phone call containing a short (1.5 minute) pre-recorded health message on topics including preparing for childbirth, caring for newborns, IYCF, and FP.

Kilkari evaluation: The Kilkari evaluation ( Lefevre et al. , 2019 ) was a randomized controlled trial in rural Madhya Pradesh, India. In 2018, 5095 pregnant women were enrolled and randomized to receive Kilkari or not. An endline survey in 2020, when the study participants were 12–17 months post-partum, assessed whether receiving Kilkari changed women’s knowledge or practice.

Endline Kilkari evaluation survey tool: The draft endline survey included 12 modules to assess study participants’ knowledge and self-reported practice on topics covered by Kilkari, as well as information on socio-economics, decision-making power in the household, interaction with community health workers, exposure to Kilkari, and media consumption patterns. Draft questions were drawn from a mix of tools identified in the literature, including the Demographic and Health Survey and Multiple Indicator Cluster Surveys, and developed by other academic teams.

Greater uptake of cognitive interviewing and explicit description of the process would be a strong contribution to improving the validity of survey research in this field. In this section, we discuss the steps in conducting rigorous cognitive interviews: defining scope, selecting researchers, training, sampling, data collection, and analysis. We draw illustrative examples from our experience with cognitive interviews to refine survey content for the Kilkari evaluation ( Box 2 ).

Defining the scope of cognitive interviews

In an ideal scenario, almost all questions in a data collection tool would be tested. However, time and available resources often limit how much of the survey can be tested. Examining a survey question during a cognitive interview takes far longer than asking the same question during the field survey itself. In a cognitive interview, each survey question must first be asked and answered in a quantitative manner and then discussed in an in-depth qualitative manner through a series of probes to determine how the respondent interpreted the question.

Multiple cognitive interview guides can be developed to examine sub-components of the survey questions. Thus, a cognitive interview guide can be developed to assess one portion of the survey’s questions with one set of participants, while a second interview guide can be developed to assess a different set of questions from the survey with a different set of participants, and so on. But even with multiple cognitive interview guides, researchers will likely still have to prioritize a sub-sample of questions. Selecting which questions to test is a judgement decision that can be guided by focusing on the questions most central to measuring the key outcomes of interest and the questions that are new, conceptually complex, or have never been applied to this respondent population. It is also important to keep blocks of questions (e.g. subject modules) together since they build on and relate to one another. Box 3 presents an illustrative example drawn from our team’s process of defining the scope of cognitive interviews in the Kilkari evaluation.

Priority areas of the tool selected for CI: The draft endline survey tool contained 180 questions and was to take 90 minutes to administer in the field, at about 30 seconds per question. Although we wanted to test each question, it was not feasible to do so. While the time required to test each question in the cognitive interview varies widely, we found it appropriate to allocate each draft survey question at least 3 minutes in the cognitive interview: about 30 seconds to simply ask the question and attempt to record an answer mimicking the survey data collection, and then an additional 2.5 minutes for cognitive probing. Attempting to test each question of a 180-question long survey would require an (impossibly long) nine-hour cognitive interview.

Since the Kilkari evaluation’s priority outcomes were infant and young child feeding and the use of modern contraception, we focused on cognitive interviews for the questions on these topics. We went through the draft survey tool and identified all the questions on these two topics, which were spread across modules on knowledge, practice, decision-making power and discussion. There were approximately 60 questions, which would still take 3 hours to cover in one cognitive interview. We thus decided to split them into two separate sets ( Figure 2 ).

How much of the survey can you test through cognitive interviews?

Even with limiting the number of survey questions in our cognitive testing guide to just 30, many interviews still had to wrap up before completing all 30 questions. Sometimes respondents had to leave early or were distracted. Many times the researchers found that initial questions took longer than anticipated and thus had to end the interview before completing the guide. This was particularly the case with the least educated respondents and the earlier draft version of questions. In these cases, participant comprehension was very low and thus the researchers spent a long time explaining questions to participants and seeking to understand the various ways in which questions failed.

Key learning: You can test far fewer questions in a cognitive interview than you can cover in a survey of comparable duration (usually 1.5 hours). Multiple cognitive interview sets may be required to test all priority survey questions.
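The timing arithmetic in this box can be reproduced with a short sketch. The per-question figures (30 seconds in the field survey, 3 minutes in the cognitive interview) come from the text above; the function names and the 1.5-hour cap per interview guide are assumptions introduced for illustration only.

```python
# Back-of-envelope timing for cognitive interviews, using the figures reported above.
SURVEY_SECONDS_PER_QUESTION = 30   # field survey pace reported in the text
CI_MINUTES_PER_QUESTION = 3.0      # about 0.5 min to ask plus 2.5 min of probing


def survey_minutes(n_questions: int) -> float:
    """Expected field-survey duration in minutes."""
    return n_questions * SURVEY_SECONDS_PER_QUESTION / 60


def cognitive_interview_hours(n_questions: int) -> float:
    """Expected cognitive-interview duration in hours at 3 minutes per question."""
    return n_questions * CI_MINUTES_PER_QUESTION / 60


def sets_needed(n_questions: int, max_hours: float) -> int:
    """Smallest number of interview guides that keeps each guide within max_hours.

    max_hours is a hypothetical planning cap, not a value prescribed by the method.
    """
    per_set = int(max_hours * 60 // CI_MINUTES_PER_QUESTION)
    return -(-n_questions // per_set)  # ceiling division


print(survey_minutes(180))             # 90.0 minutes for the full draft survey
print(cognitive_interview_hours(180))  # 9.0 hours to test every question
print(cognitive_interview_hours(60))   # 3.0 hours for the priority questions
print(sets_needed(60, max_hours=1.5))  # 2 guides of 30 questions each
```

Because 3 minutes per question is described as a minimum, these durations are best read as lower bounds; the box notes that many interviews ended before all 30 questions were covered.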

Selecting and training researchers to conduct cognitive interviews

Cognitive interviewing requires high-level analytical understanding, linguistic insight, and collaboration among researchers. Researchers must be fully proficient in the language spoken by the target population, as well as the original language of the draft questions, in cases where translation is involved. This proficiency is vital so that each researcher understands the nuances of the original questions and can carefully adapt the phrasing of the questions to ensure local understanding. The effects of similarities and differences in interviewer/participant’s gender, age, sexuality, class, and ethnicity have been considered in the qualitative research methodological literature ( Fontana and Frey, 1994 ; Hsiung, 2010 ). For cognitive interviewing, the same considerations apply, wherein interviewer identity must be considered in light of the topic being studied and the research context ( Hsiung, 2010 ). Ideal researchers are strong qualitative interviewers, so that they can undertake appropriate probing and use verbal and nonverbal approaches to setting respondents at ease and handling respondent discomfort with the potentially unfamiliar process of the cognitive interview. They should also be familiar with quantitative survey research, so that they can understand how quantitative enumerators will administer questions and seek to determine appropriate answers. Box 4 discusses team composition for the Kilkari endline survey cognitive interviews.

Team structure: Our eight-person research team consisted of five female researchers, a male research team manager, a male logistics coordinator and a female team lead. The five researchers were all master’s level social scientists with prior qualitative research experience and training. The researchers worked in pairs, with the fifth and most junior researcher providing backup support. The research manager worked with the logistics coordinator to handle day-to-day logistics, sampling and data management. They also handled sensitive community relationship issues, such as taking curious onlookers (particularly husbands, who were sometimes keen to jump in and answer questions for their wives) away from the cognitive interview. The research lead and the research manager conducted training, developed the research protocol, and ran debriefs.

Profile and selection of researchers: Researchers were fluent in Hindi and English, had prior qualitative experience and, where possible, had worked previously in Madhya Pradesh. Additional experience with and sensitivity to key issues relevant to successful qualitative interviewing in rural India included: awareness of gender and caste power dynamics; knowledge of rural Indian community dynamics including health system dynamics; capacity to effectively probe; and understanding of rapport-building.

Key learnings: Experienced researchers, ideally with an understanding of both quantitative and qualitative data collection, are required and should work in pairs (one to lead the interview and one to take detailed notes). Fluency in both the survey’s starting language and its target language is vital in cases where the survey is translated.

Training: Training was composed of the following modules:

1. Overview of the entire Kilkari evaluation;
2. Overview, objectives and sampling for cognitive testing within the Kilkari evaluation;
3. Principles of cognitive interviewing;
4. Findings from earlier cognitive interviewing on other topics to showcase the types of issues identified through this research process and how these cognitive failures were resolved to strengthen another survey tool;
5. Principles of qualitative interviewing;
6. Research ethics and consent processes;
7. Data management, cover sheets, field logistics, and safety;
8. In-depth lecture and discussion on research topic 1: infant and young child feeding including recommended practices on exclusive breastfeeding, what exclusive breastfeeding means, and recommended practices on complementary feeding;
9. Question-by-question examination of the cognitive interview guide on infant and young child feeding (IYCF) to ensure that each researcher understood the underlying intent of each question;
10. Role play to practice cognitive interviewing;
11. In-depth lecture and discussion on research topic 2: family planning;
12. Question-by-question examination of the cognitive interview guide on family planning to ensure that each researcher understood the underlying intent of each question.

Modules 9 and 12 required two days each. We examined each survey question to be tested in the field—including the answer options—to thoroughly understand the question’s intent, assess the Hindi translation, and hypothesize potential areas of confusion that could arise. We also examined the pre-developed cognitive probes for each survey question and edited them where necessary (more on this in Box 7 ). We furthermore discussed how we could handle potential participant reactions to the questions.

We covered Modules 1–10 in the first week-long training. We then conducted our data collection on IYCF. Only once that data collection process was complete did we proceed to Modules 11 and 12. In separating exposure to the two different cognitive interview topics and guides, we ensured that the team was immersed in and focused on only one area at a time, and did not forget the family planning content while working on IYCF. While in many cases piloting is indicated, the richness of initial data exceeded expectations and the resulting interviews were included in final analyses.

Key learnings: Some researchers initially struggle to understand the intent of cognitive interviewing and to wear two hats; they must toggle between ‘quantitative enumerator mode’, wherein they read the question as it is written and attempt to elicit a response as if conducting a quantitative survey, and ‘qualitative interviewer mode’, wherein they explore what the respondent was thinking about and draw from narrative explanations to access memories or opinions. Role play during training and close observation of the interviews are necessary to ensure the research team truly understands the intent of cognitive interviews and is capable of implementing this data collection methodology.

Participant sampling

Participants in cognitive interviews must be drawn from the same profile as the intended survey respondents. It is difficult to predict how many participants will need to be sampled in order to capture all the cognitive failures with the survey questions. A relatively small number of well-conducted cognitive interviews can yield an enormous amount of rich information, particularly when there is a large cultural and linguistic gap between the researchers and respondent population. Our research has found reasonable evidence of saturation with a total of 20–25 participants over three rounds, which broadly aligns with recommendations from high-income countries, such as the US Centers for Disease Control and Prevention’s guidance of 20–50 respondents ( CDC/National Center for Health Statistics, 2014 ) and leading American cognitive interviewing methodologist Gordon Willis’s 8–12 subjects per round, multiplied by 1–3 rounds ( Willis, 2014 ). Direct comparison of sample sizes across studies is often inappropriate because they involve testing different numbers of questions over different numbers of rounds, with varying types of respondent groups, and some involve translation while others do not. Published literature highlights a range of sample sizes, including 10 urban Greenlandic residents for a sexual health survey available in three languages ( Gesink et al. , 2010 ); 15 people per language group per round (four languages, two rounds and a total of 120 interviews) for a women’s empowerment in agriculture survey in Uganda ( Malapit, Sproule and Kovarik , 2016 ); 24 people across seven ethnic groups for a mental health survey in the UK without translation ( Care Quality Commission, 2019 ); 34 people stratified for age, gender, education level and location in rural Bangladesh for assessing the cultural suitability of a World Health Organization (WHO) quality of life assessment ( Zeldenryk et al. , 2013 ); 20 women and 20 men to improve a healthcare report card in Tajikistan ( Bauhoff et al. , 2017 ); and 49 women in rural Ethiopia to assess the resonance of a WHO question on early initiation of breastfeeding ( Salasibew et al. , 2014 ). The following three suggestions can help guide sampling in an efficient and rigorous manner.

First, focus on sampling participants from within the survey’s target population who are most likely to struggle with the survey; these are usually the least educated and most marginalized people ( Box 6 ). Interviewing these participants will reveal the weaknesses of the survey most rapidly and create the opportunity to adapt the survey to be comprehensible to the entire target population. While some researchers recommend engaging a range of respondents ( Willis, 2005 ), our experience in rural India found that participants with higher than average education and exposure were far less useful in identifying issues than participants with the lowest education and exposure.

Cognitive interview population: Since the endline survey would be conducted among the 5095 women enrolled in our evaluation 1–1.5 years after they gave birth, our cognitive interviews were conducted among participants with a similar profile: rural women in Madhya Pradesh who had access to a mobile phone and were mothers of a child between 12 and 17 months of age. Within this profile, we skewed our sample towards women with low levels of education, from marginalized castes, and in lower socio-economic strata.

Sample: We conducted two sets of cognitive interviews: one on IYCF ( n = 21) and one on family planning (FP) ( n = 24) ( Table 3 ). Each set required three rounds of interviews, with the questions revised twice: after Round 1 and after Round 2. Round 1 and Round 2 involved a higher number of respondents because we sought sufficient data to understand and document the range of cognitive failures associated with the draft survey questions. Round 3 required fewer respondents because by that stage we were generally confirming that the questions were working as intended. Concentrating on lower literacy and marginalized women was highly efficient at exposing problems with the draft questions and enabling us to reduce the number of interviews necessary. With family planning, we set out to test a portion of questions that were to be asked only of women who had become pregnant in the year since the birth of their previous child. However, this event was relatively rare and we considered it inappropriate to screen women at enrolment for this event, so we oversampled in hopes of including at least a few women who would not skip out of this portion of questions.

Sample of participants for cognitive interviewing in Kilkari

| Topic of survey questions | Round 1 | Round 2 | Round 3 | Total |
|---|---|---|---|---|
| Set 1: IYCF | 7 | 8 | 6 | 21 |
| Set 2: FP | 13 | 6 | 5 | 24 |

Key learnings: We found that as few as six respondents per round was sufficient to expose cognitive failures and enable revision for the next iteration. Focus on sampling the respondents who are most likely to struggle to comprehend the survey questions—generally those within your target population who have the lowest exposure or education. You can probably conduct an unlimited number of cognitive interviews and continue identifying small potential improvements. However, returns diminish—we found three rounds to be sufficient.
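As a quick consistency check, the per-round counts in Table 3 can be totalled and compared against the guidance ranges quoted earlier in this section (Willis’s 8–12 subjects per round over 1–3 rounds, and the CDC’s 20–50 respondents). This is a minimal sketch; the variable and function names are illustrative assumptions, and the comparison carries the same caveat as the text: sample sizes from different studies are not directly comparable.

```python
# Per-round participant counts as reported in Table 3.
ROUNDS = {"IYCF": [7, 8, 6], "FP": [13, 6, 5]}

# Guidance ranges quoted in the text, expressed as (minimum, maximum) totals.
WILLIS_RANGE = (8 * 1, 12 * 3)  # 8-12 per round multiplied by 1-3 rounds
CDC_RANGE = (20, 50)


def in_range(n: int, bounds: tuple) -> bool:
    """True when n falls within the inclusive (low, high) bounds."""
    low, high = bounds
    return low <= n <= high


def summarize(rounds: dict) -> dict:
    """Return each set's total and whether it sits inside each guidance range."""
    summary = {}
    for topic, counts in rounds.items():
        total = sum(counts)
        summary[topic] = {
            "total": total,
            "within_willis": in_range(total, WILLIS_RANGE),
            "within_cdc": in_range(total, CDC_RANGE),
        }
    return summary


for topic, result in summarize(ROUNDS).items():
    print(topic, result)
# IYCF {'total': 21, 'within_willis': True, 'within_cdc': True}
# FP {'total': 24, 'within_willis': True, 'within_cdc': True}
```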

Second, think about sampling in terms of iterations. Cognitive interviewing requires an initial round of interviews using the first version of the survey. Then, the team will revise the survey based on detailed debriefing and take version two to the field for another round of cognitive interviews. Additional rounds of revision are required until the survey achieves cognitive match between researcher intent and participant comprehension ( Figure 1 ). The number of respondents required may reduce from iteration to iteration as the survey questions become increasingly more appropriate to the local context.

Third, aim to include participants whose experiences exhaust the domains covered by the survey ( Beatty and Willis, 2007 ). If all of your participants skip out of a certain section of the survey, you will not be able to test the questions in this section. Ideally, your recruitment strategy can pre-identify people who will complete specific sections of the survey. However, if it is difficult to pre-identify respondents who have experienced specific domains of interest that have low prevalence, you will have to increase your sample size.
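One way to reason about how much to oversample for a low-prevalence experience is a simple binomial calculation. The sketch below assumes participants are recruited independently and that each has the same probability `p` of having had the experience; the prevalence of 0.2 and the 90% target are hypothetical values chosen for illustration and are not figures from the Kilkari evaluation.

```python
from math import comb


def prob_at_least(k: int, n: int, p: float) -> float:
    """Probability that at least k of n independent participants have the experience."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


def min_sample(k: int, p: float, target: float = 0.9, n_max: int = 500):
    """Smallest n (up to n_max) giving at least `target` probability of k eligible participants."""
    for n in range(k, n_max + 1):
        if prob_at_least(k, n, p) >= target:
            return n
    return None  # target not reachable within n_max


# Hypothetical: the experience applies to 20% of the target population.
print(min_sample(k=1, p=0.2, target=0.9))  # 11
```

Under these assumptions, 11 participants would be needed for a 90% chance that at least one of them reaches the section in question; requiring several eligible participants, or facing a rarer experience, pushes the number up quickly.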

Data collection

Cognitive interviewing begins with first asking the original survey question exactly as it is written and recording the respondent’s answer using the original response options. The interview then proceeds by eliciting feedback from the respondent to understand how they interpreted the question and why they gave the response provided. Two main approaches have been used for eliciting this feedback: (1) probing and (2) ‘think aloud’ ( Table 4 ).

Approaches to eliciting feedback in cognitive interviews

| Approach | Description | Benefits | Drawbacks |
|---|---|---|---|
| Think aloud | Participant talks through their mental processes and memory retrieval as they interpret questions and formulate answers ( , 2007) | | ( ; , 2013) |
| Probing | Scripted probes: Researcher uses pre-developed questions to interview participant about their interpretation of the question, such as ‘what does [word] mean to you?’ or ‘why did you say [answer]?’ Emergent probes: Researcher formulates probes during the cognitive interview to explore emergent issues, such as ‘Earlier you said you had never done [activity] but now you said you had completed [sub-activity]. Why did you say that?’ ( ; , 2013) | ( , 2013) | |

Probing requires that enumerators use a combination of scripted and unscripted prompts to guide the directionality of the interview, while ‘think aloud’ asks the respondent to verbalize their thoughts while interpreting the question and formulating their response ( Willis, 2005 ). While some researchers find success incorporating the think aloud approach ( Ogaji et al. , 2017 ), probing is gaining consensus as the ideal method ( Willis, 2014 ) and is particularly appropriate in global public health when working with respondents who find it easier to answer questions than verbalize their thought process ( Zeldenryk et al. , 2013 ). Even with probing, careful effort must be made to manage the shortcomings of this method. Researchers must provide clear explanation that the exercise seeks participant feedback on the questions and that any confusion or incomprehension is entirely the research team’s fault, not the participant’s. Box 7 provides an example cognitive interview question and reflections on the data collection process.

Data collection tool: The data collection tool consisted of each draft survey question (and its answer options) followed by suggested scripted probes and comments about areas to explore. Researchers were also strongly encouraged to use emergent probes based on specific information that arose during their interview. Figure 3 provides an example question from the IYCF cognitive interview guide.

Example question from the IYCF cognitive interview guide

Data collection experience: We found that some participants struggled to understand the purpose of the interview and felt embarrassed or annoyed by the probes. Some researchers initially struggled to move beyond the scripted probes, at times failing to probe on issues that arose and demanded attention. During debriefs, we dissected each question and subsequent probing and emphasized the importance of unscripted probing to better understand respondents’ cognitive processes. For instance, if a respondent said that a baby should be breastfed 3 hours after birth, and we knew from other questions in the survey that the respondent had a caesarean delivery, the interviewer had to probe to determine whether the participant experienced delayed breastfeeding due to her post-operation recovery and whether her reply on when babies should breastfeed was actually her description of when her baby was breastfed. For another example, if a participant said she had not heard of condoms when directly asked in the knowledge section and then later said that she had used condoms when asked about her use of birth control, the researcher had to have the insight to circle back to the initial knowledge question about condoms to determine if the initial response was driven by low comprehension (maybe we used an unfamiliar word for condom) or discomfort (shyness) or another factor.

Key learnings: Researchers need deep familiarity with the tool and subject matter to successfully combine scripted and emergent probing. Rapport must be developed with the research participants and they must be regularly reassured and reminded that any confusion caused by the survey questions is the research team’s ‘fault’ and not theirs.

Data collection ideally requires two researchers to conduct every interview: one to lead the questioning and one to take responsibility for notetaking and to support the questioning. While in some qualitative interviews junior researchers can serve as appropriate notetakers, for cognitive interviewing experienced, trained researchers should perform this role. Notetaking is essential in cognitive interviewing because debriefs and rapid revision of survey questions depend on detailed notes being available for analysis after the interview. Notetakers must be trained to record the various forms of cognitive failure that occur during the interview as well as non-verbal feedback from the respondent. While recordings of the interviews can also be reviewed during the debriefs, reviewing the whole audio file or generating and reading a complete transcript requires far more time than is available in the field. High-quality notes are the backbone of the rapid field-based analysis, discussed next, that enables production of revised survey questions.

Debriefs and analysis

To ensure the robustness of findings, cognitive interviewing requires alternating data collection with team debriefing sessions from which revisions to the tool may emerge. This process is iterative, with resulting changes to language and tool content requiring additional testing until the final round of cognitive interviews showcases high comprehension amongst respondents.

Box 8 showcases the field work approach from the Kilkari endline survey cognitive interviewing, which found that 1.5 days of debriefing time was required for every 7–8 interviews conducted. For every survey question being tested, researchers will need to budget at least 3 minutes during the interview and an hour during the debrief to discuss what each respondent said for that question and consider how to improve the question. Simple questions that work well take the least amount of time—perhaps less than 3 minutes during the interview to establish that the respondent interpreted the question as expected and another 10 minutes during the debrief to clarify that all interviews had similar success. Questions with translation problems demand more time. Questions with deeper conceptual issues, such as entire concepts failing to resonate with respondent worldview, take the most time. The schedule for data collection and the steps used in debriefing are discussed in Box 8 .

Phase 1. Revising the survey tool: We aimed to conduct approximately six cognitive interviews in one day of fieldwork. After collecting data through about eight interviews on the first version of the instrument, we required 1.5 days for debrief and revision before returning to the field to test the second iteration of the tool ( Table 5 ).

Illustrative schedule of 1 month of cognitive interview field work

| Day 1 | Day 2 | Day 3 | Day 4 | Day 5 | Day 6 | Day 7 |
|---|---|---|---|---|---|---|
| Training, including topic lecture and discussion on IYCF and detailed review of the IYCF cognitive interview guide | Break | | | | | |
| 5 cognitive interviews (CIs) on IYCF version 1 | Morning: 3 CIs on IYCF version 1 Afternoon: Debrief | Continue debrief and revise survey questions, create IYCF version 2 | 5 CIs on IYCF version 2 | Morning: 2 CIs on IYCF version 2 Afternoon: Debrief | Continue debrief and revise survey questions, create IYCF version 3 | Break |
| 6 CIs on IYCF version 3 | Last debrief and revisions to create final version of IYCF questions | Topic lecture and discussion on FP and detailed review of the FP cognitive interview guide | 6 CIs on FP version 1 | 7 CIs on FP version 1 | Break | |
| Debrief and revise survey questions | Additional debrief and revision, create FP version 2 | 6 CIs on FP version 2 | Debrief and revise survey questions, create FP version 3 | 5 CIs on FP version 3 | Last debrief and revisions to create final version of FP questions | Break |

Components of debriefs:

All researchers who conducted and took notes during the cognitive interviews attend, with the lead researcher serving as facilitator.

The debrief begins with collecting consent forms, uploading of audio recordings, and entering basic data information (assigning each interview a unique ID and documenting the duration, location, respondent age, etc.) into a data management system.

A spreadsheet is then used to document and systematize the debrief. Our team found success using rows to list the quantitative survey questions and response options and columns to list each interview by unique ID.

The team proceeds survey question by survey question. For each survey question, the researchers draw from the interview notes and occasionally from reviewing the audio recordings to document and discuss how the participant(s) that they interviewed responded to the question, what answer they provided (if any), and what additional information the cognitive probing elicited. The researchers and/or facilitator write extensive notes in the spreadsheet for each question to summarize what was said for each participant.

Revisions—rewording, rewriting, reordering, or removing questions—are then made by the entire team to attempt to resolve issues. These revisions are documented in the spreadsheet by adding a new column next to the original question.

After each question has been discussed, each interview participant’s responses have been shared, and each revision has been formulated in the spreadsheet, a revised cognitive interview guide is developed, with updated probes as needed.

The debrief closes with a discussion of research challenges, logistical considerations for the next day’s fieldwork, and participant sampling.
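The spreadsheet layout described in these steps (one row per survey question, one column per interview ID, and a revision column next to the original question) can be sketched as follows. This is an illustrative structure only, not the team’s actual tool; the column names, interview IDs, and placeholder question are all hypothetical.

```python
import csv
import io


def build_debrief_sheet(questions, interview_ids):
    """Create an empty debrief grid: one row per question, one column per interview."""
    header = ["question_id", "question_text", "response_options",
              "revised_question", *interview_ids]
    rows = []
    for q in questions:
        row = {col: "" for col in header}
        row.update(question_id=q["id"], question_text=q["text"],
                   response_options=q["options"])
        rows.append(row)
    return header, rows


def record(rows, question_id, column, note):
    """Write a debrief note (or a revision) into one cell of the grid."""
    for row in rows:
        if row["question_id"] == question_id:
            if column not in row:
                raise KeyError(f"unknown column: {column}")
            row[column] = note
            return
    raise KeyError(f"unknown question: {question_id}")


def to_csv(header, rows):
    """Serialize the grid as CSV text so it can be opened in any spreadsheet program."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=header)
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


# Hypothetical placeholder content, not an actual Kilkari survey item.
questions = [{"id": "Q1", "text": "<draft question text>", "options": "Yes / No / Don't know"}]
header, rows = build_debrief_sheet(questions, ["CI-001", "CI-002"])
record(rows, "Q1", "CI-001", "Answered 'yes'; probe showed a key term was unfamiliar.")
record(rows, "Q1", "revised_question", "<reworded question for next round>")
print(to_csv(header, rows))
```

Keeping every interview in its own column makes it easy to scan across a row during the debrief and see whether a problem with a question recurred across participants or was a one-off.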

Phase 2. Preparation of peer-reviewed manuscripts (optional)

Often the end goal of cognitive interviewing is the production of a revised survey instrument that is valid and locally grounded. If this is the case, by the end of the final debrief, the team is finished. However, in cases where additional dissemination of the results of the cognitive interviewing is warranted, the cognitive interviews should be transcribed for analysis. When necessary, they also have to be translated, which demands extreme care. Significant portions of text in the original language must be retained to capture nuance in meaning around vocabulary words. The researchers should themselves carry out or at least check the translations. Thematic analysis can then be used to classify the text segments in the transcripts according to the cognitive failures exemplified.

Key learnings: Each cognitive interview generates an enormous amount of data that must be documented and used for subsequent revisions in the field. Cognitive interviewing data collection must be balanced with extensive time allocated for debriefing and revision.

Support to quantitative survey training

Once the survey has achieved strong performance in the target population, cognitive interviewing is complete. The larger survey enumeration team will be formed and trained. It is useful to send some or all of the cognitive interview researchers to support the quantitative enumerator training and pilot testing. The cognitive interviewers can explain to the quantitative survey team why questions are worded and ordered the way they are and how to handle the types of responses that may arise in the population.

The devil is in the details when it comes to cognitive interviewing—in terms of both the quantitative survey that this method hones and the cognitive interviewing method itself. Cognitive interviewing focuses on getting each detail of a survey question right to ensure valid data collection. Each word chosen, the exact syntax used, the response options provided (yes/no, Likert, etc.), the addition or removal of examples, the question styles selected (such as hypotheticals and true/false statements), and the resonance of the underlying constructs being assessed will all influence the alignment of researcher intent and respondent interpretation and response. The application of cognitive interviewing also demands careful attention to detail. Researchers must allocate adequate time and attention to each stage of the process and must ground difficult decisions in strong methodological logic. Cognitive interviewing demands tough decisions on which questions to test to ensure that the scope of the exercise is appropriate. The research team must be carefully selected and trained so that they can set respondents at ease and probe effectively to identify and document cognitive failures in real time. Research participants most likely to yield rich data must be sampled. The cycles of interviews, debriefing, analysis, and revision must be structured, meticulous and well documented.

As the methodology of cognitive interviewing continues to evolve in this field, the recommendations in Table 6 to ensure quality can help develop standards for research rigour.

Table 6. Recommendations to ensure the quality of cognitive interviews

| Component | Recommendations | Rationale for recommendations |
|---|---|---|
| Scope of survey tool tested | | |
| Developing the cognitive interview guide | | |
| Recruiting and training researchers | | |
| Participant sample characteristics | | |
| Conducting interviews | | |
| Debrief and analysis | | |
| Supporting quantitative survey enumerator training | | |

Survey research is fundamental to shaping our understanding of health systems. Surveys may aim to measure a range of outcomes, including population health, practices, care-seeking, attitudes towards services, and knowledge on health issues and topics. Researchers, practitioners, and policymakers rely on survey data to assess the scope of health or health system problems, prioritize the distribution of resources, and evaluate the effectiveness of programmes and interventions. It is thus crucial that researchers ensure that survey instruments are valid, i.e. that they truly measure what they intend to measure. Cognitive interviewing must be recognized as a fundamental validation step in survey development (Beatty and Willis, 2007; Willis and Artino, 2013), alongside literature review, expert consultation, and drawing from previously developed survey tools (Sullivan, 2011).

Ultimately, the need for cognitive interviewing in global public health arises from a gap, whether linguistic, cultural, or socioeconomic, between researchers and respondents. The greater this gap, the more space there is for cognitive mismatch to occur, leading to invalid research findings, and the more important cognitive interviewing will be in reducing this divergence. At the low-risk end of the spectrum are those with the smallest gap, such as surveys that are developed, administered, and analysed by the same population that completes them, as may occur during participatory action research. At the high-risk end of the spectrum with the greatest gap between researchers and respondents are surveys developed by researchers in one setting and adapted by another research group for use in a different setting, such as surveys developed in English in Western contexts and then translated to other languages and administered in non-Western settings. Cognitive interviewing may identify the most egregious survey question failures in the latter instance but can also identify surprising gaps between researcher intent and respondent interpretation even in the former.

The data underlying this article will be shared on reasonable request to the corresponding author.

This work was funded by the Bill and Melinda Gates Foundation (OPP1179252).

This work was made possible by the Bill and Melinda Gates Foundation, and we particularly acknowledge the support of the following people there: Diva Dhar, Suhel Bidani, Rahul Mullick, Suneeta Krishnan, Neeta Goel and Priya Nanda. We further thank BBC Media Action, Dan Harder at the Creativity Club for edits to the overarching framework, and the qualitative researchers Manjula Sharma, Dipanwita Gharai, Bibha Mishra, Namrata Choudhury, Aashaka Shinde, Shalini Yadav, Anushree Jairath, and Nikita Purty.

The examples used in this methodological musing article were drawn from research that received ethical approval at Sigma, Delhi, India (10041/IRB/D/17-18) and Johns Hopkins University, Baltimore, USA (00008360).

The authors declare that they have no conflict of interest.

Agency for Healthcare Research and Quality in the Department of Health and Human Services. 2019. Conducting Cognitive Testing for Health Care Quality Reports. https://www.ahrq.gov/talkingquality/resources/cognitive/index.html, accessed 1 April 2021.

Bauhoff S, Rabinovich L, Mayer LA. 2017. Developing citizen report cards for primary health care in low and middle-income countries: results from cognitive interviews in rural Tajikistan. PLoS One 12: 1–14. doi: 10.1371/journal.pone.0186745.

Beatty PC, Willis GB. 2007. Research synthesis: the practice of cognitive interviewing. Public Opinion Quarterly 71: 287–311. doi: 10.1093/poq/nfm006.

Care Quality Commission. 2019. 2019 Community Mental Health Survey: Quality and Methodology Report. pp. 1–20. https://www.nhssurveys.org, accessed 15 March 2021.

CDC/National Center for Health Statistics. 2014. National Center for Health Statistics: Cognitive Interviewing. https://www.cdc.gov/nchs/ccqder/evaluation/CognitiveInterviewing.htm, accessed 1 April 2021.

Drennan J. 2003. Cognitive interviewing: verbal data in the design and pretesting of questionnaires. Journal of Advanced Nursing 42: 57–63. doi: 10.1046/j.1365-2648.2003.02579.x.

Fontana A, Frey JH. 1994. Interviewing: the art of science. In: Denzin NK, Lincoln YS (eds). The Handbook of Qualitative Research. Thousand Oaks, CA: SAGE, pp. 361–76.

Gesink D, Rink E, Montgomery-Andersen R, Mulvad G, Koch A. 2010. Developing a culturally competent and socially relevant sexual health survey with an urban arctic community. International Journal of Circumpolar Health 69: 25–37. doi: 10.3402/ijch.v69i1.17423.

Hall J, Barrett G, Mbwana N et al. 2013. Understanding pregnancy planning in a low-income country setting: validation of the London measure of unplanned pregnancy in Malawi. BMC Pregnancy and Childbirth 13: 1. doi: 10.1186/1471-2393-13-200.

Hsiung P-C. 2010. Reflexivity: The Research Relationship, Lives and Legacies: A Guide to Qualitative Interviewing. https://www.utsc.utoronto.ca/~pchsiung/LAL/reflexivity/relationship, accessed 1 April 2021.

Knafl K, Deatrick J, Gallo A et al. 2007. The analysis and interpretation of cognitive interviews for instrument development. Research in Nursing and Health 30: 224–34. doi: 10.1002/nur.20195.

Lefevre A, Agarwal S, Chamberlain S et al. 2019. Are stage-based health information messages effective and good value for money in improving maternal newborn and child health outcomes in India? Protocol for an individually randomized controlled trial. Trials 20: 1–12. doi: 10.1186/s13063-019-3369-5.

Malapit H, Sproule K, Kovarik C. 2016. Using Cognitive Interviewing to Improve The Women’s Empowerment in Agriculture Index Survey Instruments. Evidence from Bangladesh and Uganda. Poverty, Health and Nutrition Division, International Food Policy Research Institute.

Miller K. 2003. Conducting Cognitive Interviews to Understand Question-Response Limitations. American Journal of Health Behavior 27: S264–72.

Ogaji DS, Giles S, Daker-White G, Bower P. 2017. Development and validation of the patient evaluation scale (PES) for primary health care in Nigeria. Primary Health Care Research and Development 18: 161–82. doi: 10.1017/S1463423616000244.

Ruel E, Wagner WE, Gillespie BJ. 2016. The Practice of Survey Research: Theory and Applications. Thousand Oaks, CA: Sage Publications.

Salasibew MM, Filteau S, Marchant T. 2014. Measurement of breastfeeding initiation: Ethiopian mothers’ perception about survey questions assessing early initiation of breastfeeding. International Breastfeeding Journal 9: 1–8. doi: 10.1186/1746-4358-9-13.

Schuler SR, Lenzi R, Yount KM. 2011. Justification of intimate partner violence in rural Bangladesh: what survey questions fail to capture. Studies in Family Planning 42: 21–8.

Scott K, Gharai D, Sharma M et al. 2019. Yes, no, maybe so: the importance of cognitive interviewing to enhance structured surveys on respectful maternity care in northern India. Health Policy and Planning 35: 67–77. doi: 10.1093/heapol/czz141.

Sullivan GM. 2011. A primer on the validity of assessment instruments. Journal of Graduate Medical Education 3: 119–20. doi: 10.4300/jgme-d-11-00075.1.

Weeks A, Swerissen H, Belfrage J. 2007. Issues, Challenges, and Solutions in Translating Study Instruments. Evaluation Review 31: 153–65.

Willis G. 2014. Pretesting of health survey questionnaires: cognitive interviewing, usability testing, and behavior coding. In: Johnson TP (ed). Handbook of Health Survey Methods, First Edition. New York: John Wiley & Sons, Inc., pp. 217–42. doi: 10.1002/9781118594629.ch9.

Willis GB. 2005. Cognitive Interviewing: A Tool for Improving Questionnaire Design. Thousand Oaks, CA: SAGE.

Willis GB, Artino AR. 2013. What do our respondents think we’re asking? Using cognitive interviewing to improve medical education surveys. Journal of Graduate Medical Education 5: 353–6. doi: 10.4300/jgme-d-13-00154.1.

Zeldenryk L, Gordon S, Gray M et al. 2013. Cognitive testing of the WHOQOL-BREF Bangladesh tool in a northern rural Bangladeshi population with lymphatic filariasis. Quality of Life Research 22: 1917–26. doi: 10.1007/s11136-012-0333-1.

Cognitive Interviewing and what it can be used for

Presenter(s): Olivia Sexton, Sophie Pilley, Jo d’Ardenne and Richard Bull.